#1767130804

[ music ]

Put your hands on me

Billy Sane

Last post of the year

https://pine32.be - © pine32.be 2025

Welcome! - 107 total posts. [RSS]

A Funny little cycle 2.0 [LATEST]

#1766588690

New Go feature coming soon in 1.26: the runtime/secret package.

secret.Do(func() {
	// Secrets that should not stay in memory
	// any longer than needed
})

Once the function passed to Do returns, the registers, stack and heap values it used get erased as soon as possible, even if a panic occurs. Great to see that Go is still adding features for more explicit control over memory, even though it still uses a garbage collector.

#1765830037

Wide-angle lens dump

A bit of a stretch to call this photography, but still

sqr_dump_6

#1765230859

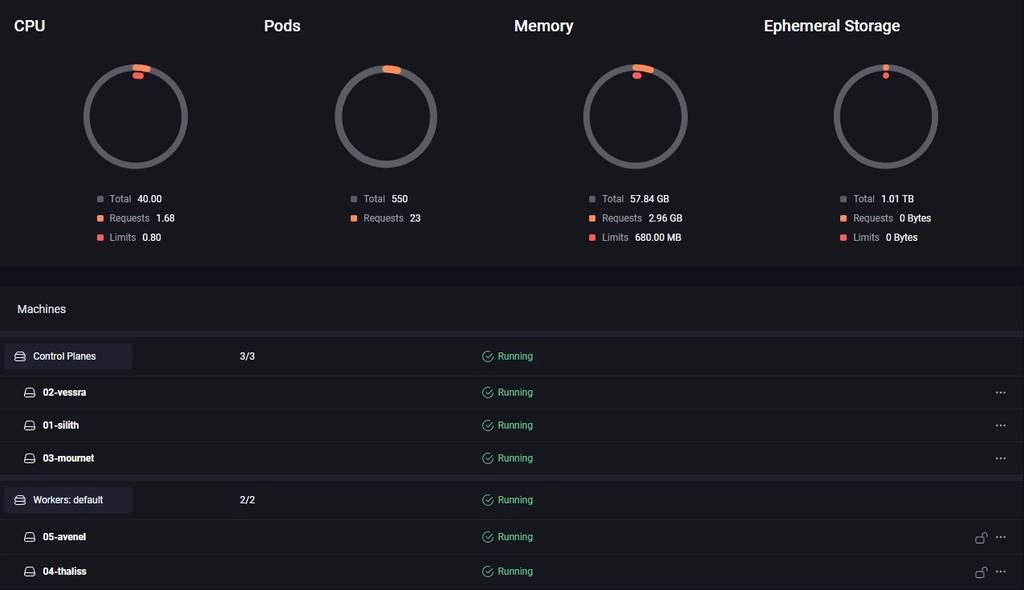

MB is now officially running on a highly available Kubernetes cluster. I don’t know if uptime is going to be better, because I don’t have a real load balancer. Currently it is just using DNS. So if one node goes down I will need to remove a DNS record and hope it propagates fast enough. Still better than one node; at least I still have some control.

Non-authoritative answer:

Name: mb.pine32.be

Address: 176.57.188.254

Name: mb.pine32.be

Address: 185.211.6.112

Name: mb.pine32.be

Address: 185.216.75.171

#1764875711

I’m setting up some new servers and was going over the usual hardening configs for ssh etc. Then I came across google-authenticator-libpam, a PAM module that allows you to secure ssh (or other things) with the standard 6-digit time-based one-time password. It is created by Google, but any authenticator app will work for this. It also has some built-in rate limiting. I think this will scare off most automated attacks, so I don’t think fail2ban will be needed. I will not be ssh-ing into these servers after the first setup (k8s), so I don’t mind the extra auth step.

#1764269123

I was looking for a small web server for serving static files bundled in a container image. Most people just use nginx because it is fast and well maintained. But the base image is 68.6 MB! Even going to alpine-slim instead of latest only gets us down to 5.27 MB. You can also use BusyBox to serve HTTP, or even better, compile your own BusyBox and strip everything down as shown in this blog. That gets us down to 154 KB. This is already a huge improvement, but we can go down to double digits using darkhttpd: 62.8 KB. Bundling one image in your static site would already negate all the improvements of not using nginx, but it is still cool to see that we can still get things done with something that would fit on a floppy disk.

#1763662379

I cast vicious mockery

#1762808104

My first bare metal Kubernetes cluster is finally online. It took a while and I tried way too many different things, but I eventually ended up with Talos and Omni for the management interface.

My first plan was some fancy net boot setup with iPXE and a custom http/tftp server that managed custom configs for each server. That would install K3s onto MicroOS and join the cluster without ever attaching a keyboard to the server. This was all done with Ignition and Combustion scripts. It worked but was error-prone and unstable. And later I discovered that a very similar project already existed, called Matchbox. This uses CoreOS instead of MicroOS, which is almost the same but Fedora flavoured. On top of this, K3s is not that simple to set up: it’s lightweight but not simple. So I was reinventing a shitty wheel. But to my credit, it did work.

Something similar but with NixOS was my 3rd plan. I never got to it, but I don’t think it would have worked that much better. A bit cleaner but still clunky.

So going back to Talos OS, which I underestimated at first. I thought it would be too rigid and require a lot of config. It does require some config, but it is fully declarative so that was fine. But I was pleasantly surprised by the headless install via the http API. The install was also fast and as light as MicroOS + K3s. But still the CLI seemed error-prone to me and bootstrapping everything was still a lot of manual work.

That is where Omni fills the gap. It was a pain to set up with all the endpoints and certs that it requires (it also requires some form of SSO). But once that was done it was smooth sailing. You just create the installation media in the web interface and download the ISO (or even just copy over the PXE config in my case). And this setup is not specific to one node. You can use the same image on all the nodes and they will connect themselves to the Omni server via a WireGuard tunnel, waiting for you to start the full install via the UI. Once all nodes had connected themselves to my Omni instance I just had to click ‘create cluster’. And once nodes are in the system I can reconfigure them (clear, remove/add to a cluster, update…) as much as I want without needing a new PXE boot or a fresh ISO. It can handle many clusters and even automatically set up WireGuard networking between nodes for a hybrid setup between the cloud and on-prem. It also has native support for Hetzner, which I will certainly test out. The only downside is that Omni is not free for production use. But for a homelab it’s perfect (up to now).

Hardware is ‘done’ now, next step: lots of YAML.

#1761593595

Recently tried s.p.l.i.t, a new game by Mike Klubnika, the same dev behind Buckshot Roulette. I highly recommend going in blind, it only costs about €3 and takes around an hour to finish (if you know how terminals work). It is true psychological horror. The soundtrack was also an instant-buy and adds a lot to the game for me. That’s all, go play it.

s.p.l.i.t is a short psychological horror game, where you attempt to gain root access into an unethical superstructure.

#1760905046

New bare metal Kubernetes cluster for my homelab. I got 5 cheap Dell OptiPlex micro PCs second hand. i7-4785T, 12 GB DDR3 memory and a 250 GB SATA SSD each. Still setting everything up but it looks promising. More about the setup coming…

#1759436893

Just finished Hollow Knight: Silksong, true ending (90%, 45 hours), and it was worth all the years of waiting. Still planning to fully complete the game to 100% and grab some extra achievements. Even without any DLCs, the game is so big. I’m really looking forward to exploring those last bits. And the soundtrack by Christopher Larkin is, of course, as beautiful as always.

#1758658600

I am working on a full overhaul of my homelab and server setup (more posts about it will follow). I want to make things more concise, starting with a strong base on top of the runtime platform (Docker or Kubernetes). So I started with the reverse proxy, which is an easy choice: Traefik. It’s easy to use, stable, and cloud-native; it checks all the boxes.

Next up is some form of centralized authentication. Mostly I just want an OIDC server with its own user management. I use this for single sign-on for my services that I run and for proxy-level authentication to secure services that should be more secure or don’t have any built-in auth (like the Traefik dashboard). I have been running Authentik for 6 months now, but it is overcomplicated and resource-hungry. I don’t get why so many homelabbers rave about this. It is a great project and it works perfectly, but it is also built for enterprise scale with a huge amount of customizations and integrations. I don’t need all that, and it is eating my CPU and memory (it’s written in Python).

So, time for something else. Pocket ID works great: it is simple, clean, fast, and good-looking. It only uses passkeys, so it is secure by default. And with a plugin, it can work with Traefik. For my current setup, it is almost perfect. But there is one thing: it is not fully declarative and doesn’t work super well with Kubernetes. But if you don’t care about declarative configs or high availability (which should be the case for most homelabs), I highly recommend it. I have been using it with my current setup and it works great.

But we are still in search of something for Kubernetes. I heard a lot of good things about Authelia, so I tried it. Lightweight, they are working on a Helm chart, written in Go. It was all looking good until I started the config part: it needs an LDAP server. One more component to add, which added more complexity again. I wanted to stay light, so I added lldap, a lightweight LDAP server written in Rust. It did work, but still needing an LDAP server felt archaic (because it is). And I don’t like the split between user management and authentication management.

The search continued until I ran into Rauthy. It’s lightweight, simple to set up, and puts heavy emphasis on passkeys and very strong security in general. Written in Rust to be as memory-efficient, secure, and fast as possible. It directly supports ForwardAuth, so no plugin needed this time (one less dependency). And it uses an embedded distributed SQLite database, so ready for high availability without running any external database. And the nice admin UI, audit logs, and auto-IP blacklisting are nice bonuses on top. I am still testing it, but so far this seems perfect for what I need.

#1757190021

I recently updated the mb container image. It’s now just 4.91 MB (compressed), down from the previous 8.4 MB. This was possible by moving from an Alpine based container to a from scratch container. Go compiles to a static binary if you don’t need CGO, but I was using CGO because I needed some libc (musl/gnu). The next step was switching to a different SQLite driver, since that was my only C dependency.

There are several pure Go SQLite drivers, some are full rewrites in Go, while others are C code transpiled to Go. The solution I chose is ncruces/go-sqlite3, which compiles the entire SQLite project to WebAssembly and runs it in wazero, a zero dependency wasm runtime for Go. This way, we’re still running C code but without linking it, so no CGO.

I haven’t tested performance yet, but it seems fine so far. I’m planning to create a test suite using k6 for both functional and load testing.

There’s also some room for further size improvements. The default SQLite wasm binary includes all optional extensions, but I don’t need all of them (just math), so building a custom binary could shrink the image even more.

#1756735754

I don’t remember, what’s the agenda?

#1755277258

New vacation dump: Porto, Portugal

sqr_dump_5

#1754342475

I like the style of this guy, and it also fits very well with this blog. This is the original video, check his channel for lots of funky/experimental videos. I just wanted a small snippet here because you never know with those small channels. Did it with some quick and dirty yt-dlp + ffmpeg.

2010 forever

#1753545757

I recently implemented ThumbHash in my project. I’ve been searching for a solution like this for a while. Initially, I looked into the more popular BlurHash, but I found the implementation too complicated (using base83 etc.), and I wasn’t a fan of rendering via a canvas element. I also had my doubts about performance when loading hundreds of these.

Then I looked into my own implementation based on bitmaps (.bmp) with a static header that is hardcoded into the frontend for extra compactness. This would be in the same ballpark in terms of the number of bytes over the wire. And it would have the least overhead on the frontend: just concat the header with the payload and render it natively in HTML as a base64-encoded image.
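The static header idea can be sketched like this. It is written in Go only to show the mechanics; in the setup described above the concat would happen in the frontend. The 4x4 size, the 24-bit pixel format and the names `bmpHeader` and `dataURI` are assumptions for illustration, not what the project actually used.

```go
package main

import (
	"encoding/base64"
	"encoding/binary"
	"fmt"
)

const (
	width      = 4         // hypothetical placeholder size
	height     = 4         // hypothetical placeholder size
	rowSize    = width * 3 // 24-bit pixels; 12 bytes is already 4-byte aligned
	pixelBytes = rowSize * height
	headerSize = 54 // 14-byte file header + 40-byte BITMAPINFOHEADER
)

// bmpHeader builds the header shared by every placeholder of this size.
// Only the pixel payload differs per image, so only that goes over the wire.
func bmpHeader() []byte {
	h := make([]byte, headerSize)
	copy(h[0:2], "BM")
	binary.LittleEndian.PutUint32(h[2:], headerSize+pixelBytes) // total file size
	binary.LittleEndian.PutUint32(h[10:], headerSize)           // offset of pixel data
	binary.LittleEndian.PutUint32(h[14:], 40)                   // info header size
	binary.LittleEndian.PutUint32(h[18:], width)
	binary.LittleEndian.PutUint32(h[22:], height)
	binary.LittleEndian.PutUint16(h[26:], 1)  // colour planes
	binary.LittleEndian.PutUint16(h[28:], 24) // bits per pixel
	binary.LittleEndian.PutUint32(h[34:], pixelBytes)
	return h
}

// dataURI glues the static header onto a raw pixel payload (BGR order,
// rows stored bottom-up) and returns something usable as an <img> src.
func dataURI(payload []byte) (string, error) {
	if len(payload) != pixelBytes {
		return "", fmt.Errorf("payload is %d bytes, want %d", len(payload), pixelBytes)
	}
	bmp := append(bmpHeader(), payload...)
	return "data:image/bmp;base64," + base64.StdEncoding.EncodeToString(bmp), nil
}

func main() {
	payload := make([]byte, pixelBytes) // all zeroes: a black 4x4 image
	uri, err := dataURI(payload)
	if err != nil {
		panic(err)
	}
	fmt.Println(uri)
}
```

With these assumed dimensions the per-image payload is 48 bytes, and the 54-byte header never has to be transferred.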

ThumbHash works in a similar way, using base64 PNG images that are generated on the frontend. While this introduces a bit more overhead compared to my bitmap solution, the result is almost half the size of my bitmaps and offers more flexibility, since it doesn’t require a hardcoded header.

I highly recommend checking out the ThumbHash site if you’re interested.

#1752961357

Speed

#1752783987

I made an unholy oneliner to scrape, download and upload some data for my current project. Works fine on Linux, not on my Windows machine :\. It would be better in every respect as a bash script or even a Python script (cross platform). But what’s the fun in that, this is way cooler. Even though I will probably rewrite something similar in Python so I can also run it on my Windows machine. But it is good to keep my shell skills sharp.

seq 1 4 | xargs -I% sh -c 'echo "Fetching page %" >&2; curl -s "https://www.last.fm/charts/weekly?page=%"' \
| pup 'td.weeklychart-name a.weeklychart-cover-link attr{href}' \
| sed 's|^|https://www.last.fm|' \
| xargs -I {} sh -c 'echo "Processing artist URL: $1" >&2; curl -s "$1" | pup "h1.header-new-title, div.header-new-background-image"' _ {} \
| pup 'json{}' \
| jq '[ .. | objects | select(.tag=="h1" or .itemprop=="image") ] as $list | [ range(0; $list|length; 2) | { name: $list[.].text, img: $list[. + 1].content } ]' \
| tee artists.json \
| jq -r '.[].img' \
| xargs -n 1 sh -c 'echo "Downloading image: $1" >&2; wget -q "$1"' _ && \
jq -j '.[] | .img |= (split("/")[-1]) | @json, "\u0000"' artists.json \
| xargs -0 -I{} sh -c 'img=$(printf "%s" "$1" | jq -r .img); name=$(printf "%s" "$1" | jq -r .name); echo "Adding $name"; curl -s -X POST -H "Authorization: Bearer $TOKEN" -F "img=@$img" -F "json={\"name\":\"$name\"}" http://localhost:3000/api/artists/add' _ {}

#1752094992

Survived the Tour du Mont Blanc (not 100% but close enough), second time is the charm. 6 days, 155 km, 10 km elevation, 10 kg backpack. Didn’t take that many pictures but still have some nice ones.

sqr_dump_4

#1750953925

I was bored on the train so I made an edit of my car. Ironically, of course…

#1750157503

Hear me? I want sugar in my tea!!

#1749396915

I tried Ent again… I know I said that I was done with ORMs, but people were telling me that it shouldn’t be that bad, so I gave it another chance. Again with Ent, because I feel like it’s the only ORM that has a chance to work with everything I want to use it for. And up to now, it is still holding up, it hasn’t been a blocker yet for this project. Performance is fine, of course there is overhead but nothing major (unlike SQLAlchemy, which halves your performance…). It can now handle many-to-many relations with custom join tables without a problem. I was also able to change some codegen that I didn’t like (omitempty on boolean fields) using a codegen hook, and add a runtime hook for some custom row-level validation (see codeblock). The function signature of the hooks takes some getting used to, but as long as it works, it’s fine by me. I also like the bulk insert API, it is really flexible. So overall I am happily using it but I am staying watchful about any possible limitations.

func (Image) Hooks() []ent.Hook {
	return []ent.Hook{
		hook.On(
			func(next ent.Mutator) ent.Mutator {
				return hook.ImageFunc(
					func(ctx context.Context, m *gen.ImageMutation) (ent.Value, error) {
						// An image must set exactly one of release or artist.
						_, hasRelease := m.ReleaseID()
						_, hasArtist := m.ArtistID()
						if hasRelease == hasArtist {
							if hasRelease {
								return nil, fmt.Errorf("but both were provided")
							} else {
								return nil, fmt.Errorf("neither was provided")
							}
						}
						return next.Mutate(ctx, m)
					})
			},
			ent.OpCreate|ent.OpUpdate|ent.OpUpdateOne,
		),
	}
}

#1748514616

First time playing the Yakuza games, would recommend. The karaoke is fun.

#1747598386

I have spent far too much time designing a custom ID type for my current project. I wanted to use it as the primary key in a SQLite database, which imposes some constraints. Specifically, the ID must fit within 63 bits, since SQLite only supports signed integers and I want to avoid negative values. (Technically, negative IDs would work, but they’re not ideal.)

You might be thinking, “Why not just use a BLOB as the primary key? That gives you much more flexibility.” And that’s a fair point, but I am intentionally avoiding that because of how SQLite handles its hidden rowid. When you use an integer as the primary key, SQLite internally aliases it to the rowid, which makes operations significantly faster. Using a BLOB would remove that performance advantage and make the database larger.

So the next step is choosing the bit layout. The first bit is unused to prevent negative values. Then I went with 43 bits for a Unix millisecond timestamp. This gives me a range of 278 years, which should be plenty. Using the default Unix epoch, this will work until the year 2248. It will outlive me, so that is more than enough.
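The range is easy to double-check. A small sketch in Go, with the constant and function names invented for this example:

```go
package main

import (
	"fmt"
	"time"
)

const timestampBits = 43

// lastTimestamp returns the latest instant that still fits in a
// 43-bit count of milliseconds since the Unix epoch.
func lastTimestamp() time.Time {
	maxMillis := int64(1)<<timestampBits - 1
	return time.UnixMilli(maxMillis).UTC()
}

// rangeInYears converts the full 43-bit millisecond range to years,
// using an average year of 365.25 days.
func rangeInYears() float64 {
	const millisPerYear = 1000 * 60 * 60 * 24 * 365.25
	return float64(int64(1)<<timestampBits) / millisPerYear
}

func main() {
	fmt.Printf("range: %.1f years\n", rangeInYears())
	fmt.Println("last usable timestamp:", lastTimestamp().Format(time.RFC3339))
}
```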

The remaining 20 bits are random, which gives 1_048_576 possible values. I am using random values because I don’t want to keep track of state (as with an autoincrement), and my current system can handle collisions. It is still possible to swap approaches down the road while keeping the already generated IDs. 1_048_576 sounds like a lot, but this gives a 1% chance of a collision occurring when generating only 146 IDs. Then again, those IDs would need to be generated within the same millisecond. I am not expecting that much volume.
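The 146 figure is the birthday problem applied to 2^20 slots. A quick way to reproduce it in Go (function names are made up for this sketch):

```go
package main

import "fmt"

// collisionProbability returns the chance that at least two out of n
// uniformly random values drawn from `space` possibilities are equal.
func collisionProbability(n int, space float64) float64 {
	noCollision := 1.0
	for i := 0; i < n; i++ {
		noCollision *= 1 - float64(i)/space
	}
	return 1 - noCollision
}

// idsForProbability returns the smallest number of IDs for which the
// collision chance reaches target. target must be in (0, 1].
func idsForProbability(target, space float64) int {
	noCollision := 1.0
	for n := 1; ; n++ {
		noCollision *= 1 - float64(n-1)/space
		if 1-noCollision >= target {
			return n
		}
	}
}

func main() {
	const space = 1 << 20 // 20 random bits
	n := idsForProbability(0.01, space)
	fmt.Printf("%d IDs in one millisecond -> %.4f collision chance\n",
		n, collisionProbability(n, space))
}
```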

Bit  | 63 (MSB) | 62 ... 20 | 19 ... 0
-----|----------|-----------|----------
Use  | Unused   | Timestamp | Random
Size | 1 bit    | 43 bits   | 20 bits

Some example IDs: 1JTWRZPBJ4DSE, 1JTWRZSPA1NBS, 1JTWRZTQSE9G6, 1JTWRZVY5R7RT

The reason for using a timestamp in the leading bits is to minimize B-tree rebalances. As time advances, the generated IDs grow in sequence, allowing the B-tree to insert new entries without reorganizing older pages. By contrast, a completely random primary key (like a UUID v4) forces the B-tree to rebalance frequently, which can significantly degrade database performance.

Finally, the string representation: I chose Crockford’s Base32 (without the check digit). Just 13 characters to represent an int64. To me it’s practically perfect from a technical standpoint, and I like how the IDs look. I know aesthetics shouldn’t matter, but this is my project. So I set the rules, and I want things to look cool and have some aesthetic appeal. Looks way better than those stupid UUIDs.

One final note: please store your IDs in a binary representation (BLOB or integer). It hurts me every time I see an ID stored as its string representation. It is way slower and wastes storage. It mainly happens with UUIDs; most people don’t realize it actually is a binary ID and not a string. Even the spec states it, but I guess people just don’t read it.
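For the curious, a sketch of what such an encoding can look like in Go. It assumes a fixed 13-character, big-endian form with 5 bits per character, which is my reading of the example IDs and not necessarily how the project does it. Unlike full Crockford decoding, this version is strict: uppercase only, and no mapping of I, L or O.

```go
package main

import (
	"fmt"
	"strings"
)

// Crockford's alphabet: digits plus letters, without I, L, O and U.
const alphabet = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"

const encodedLen = 13 // 13 * 5 = 65 bits, enough for a 63-bit ID

// encode writes a non-negative ID as 13 characters, most significant first.
func encode(id int64) string {
	var out [encodedLen]byte
	v := uint64(id)
	for i := encodedLen - 1; i >= 0; i-- {
		out[i] = alphabet[v&31]
		v >>= 5
	}
	return string(out[:])
}

// decode parses the strict 13-character form produced by encode.
func decode(s string) (int64, error) {
	if len(s) != encodedLen {
		return 0, fmt.Errorf("want %d characters, got %d", encodedLen, len(s))
	}
	var v uint64
	for i := 0; i < len(s); i++ {
		idx := strings.IndexByte(alphabet, s[i])
		if idx < 0 {
			return 0, fmt.Errorf("invalid character %q", s[i])
		}
		// The first character only has room for 3 bits of a 63-bit value.
		if i == 0 && idx > 7 {
			return 0, fmt.Errorf("value does not fit in 63 bits")
		}
		v = v<<5 | uint64(idx)
	}
	return int64(v), nil
}

func main() {
	id, err := decode("1JTWRZPBJ4DSE") // one of the example IDs
	if err != nil {
		panic(err)
	}
	fmt.Println(id, encode(id))
}
```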